Basic Backup Plans for PCLinuxOS

by horusfalcon

Most PCLinuxOS Forum members have seen the flat statement "you might want to back up your system before doing {whatever}" innumerable times, just like that, as if backing up a system were as easy as flipping a switch. Modern systems range into the hundreds of Gigabytes of storage, and in some cases into the Terabytes. Backing up that much data is not exactly a trivial task. It is, however, a manageable one, and one that should be managed systematically for best effect. Backup strategies range from those implemented by enterprise IT departments all the way to simpler strategies for those of us using PCLinuxOS on our desktop or laptop machines.

Instead of considering the truly broad range of possibilities here, we are going to focus on devising a backup plan for a “typical desktop” system that might be representative of most PCLinuxOS users’ systems. This mythical system has a fast dual-core 64-bit processor, two Gigabytes of RAM, a multi-format DVD/RW burner, and a 500 Gigabyte hard drive.

Plan From The Beginning

So how would we begin to back up such a system? It is best to begin before the system software is installed, by setting up a partitioning scheme in which separate partitions keep system data apart from user data.

It also pays to become familiar with the Linux Filesystem Hierarchy Standard, so that you know where system data is stored. Knowing where the various data files live in a Linux system makes it easier to be selective when doing backups.

System Data: Baseline Configuration

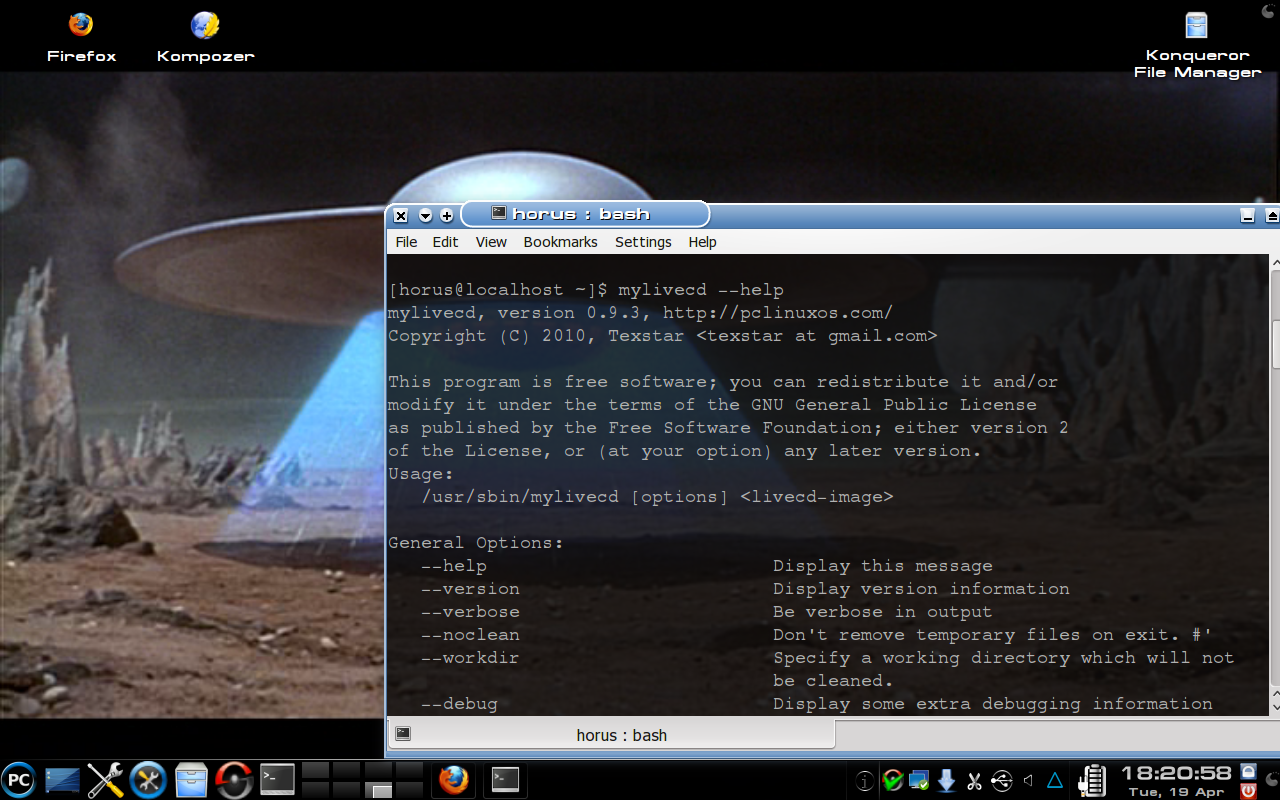

Backup Once the system is installed and all the applications and user accounts are fully configured to suit their users, make a live CD or DVD backup or an image of the baseline configuration using either mylivecd (for a live backup) or Clonezilla Live (for an image). How one decides which of these two methods to use is really a matter of individual choice, but the whole point of doing this exercise is to provide the user with a full backup of the basic system as it existed immediately after post-install configurations. It represents a starting point in the backup plan. Each of these two methods has its advantages and disadvantages: mylivecd is built-in to PCLinuxOS as 'standard equipment'.

It is a command-line tool and has something of a learning curve, but it works reliably enough and produces a live backup that can not only restore a system but also run it temporarily for other purposes (such as data recovery, if files need to be saved and backed up before recovery from a failure begins).

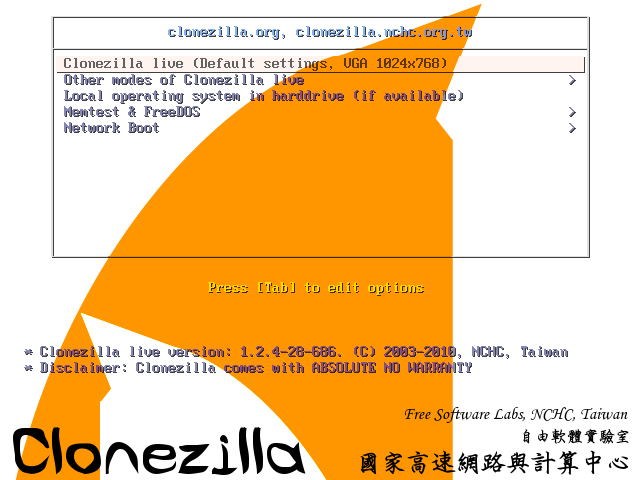

Clonezilla Live, on the other hand, is an entirely separate product, a Linux distribution in its own right, and produces an image (that is, a monolithic set of files) from which a system can be restored. It does not produce a live disk, and the image cannot be restored selectively: it is an all-or-nothing proposition that destroys any existing data on the disk or partition to which it is restored.

With all this, it would seem to have little advantage over mylivecd, but that is not so. Where Clonezilla shines is speed and compact size. (As an example, I used Clonezilla Live to back up a critical Windows Server 2008 machine at work, a Dell R410 rack server with 1 TB of total storage set up as a 500 Gigabyte mirrored RAID. I placed the image on an external USB 2.0 Seagate FreeAgent hard drive. The total uncompressed data load was some 35 Gigabytes, and the compressed image set, at 17.4 Gigabytes, was on the Seagate in 116 seconds. The server was offline for less than twenty minutes total. I have since restored this image to our new identical spare server and tested it successfully.)

The other advantage of Clonezilla images is that they can be multicast using Clonezilla Server Edition (SE) to configure several machines simultaneously over a network. I’ve never done this personally, and recommend that anyone interested check the Clonezilla SE website for more information. One last thing before we move on: Clonezilla Live has a beginner mode. Even so, it behooves the user to read the instructions on each screen carefully before making decisions, and to learn how Linux names storage devices (for example, /dev/sda for the first hard drive) while getting to know Clonezilla. (I may do a follow-up article at a later date showing how to make and restore Clonezilla images and how to use mylivecd.)
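Since Clonezilla asks you to pick source and target devices by name, it pays to know which name is which before you start. The Python sketch below is one way to take an inventory, assuming a Linux system with sysfs mounted at /sys: it reads each disk's size (the kernel reports it in 512-byte sectors) and its removable flag from /sys/block. The function name list_block_devices is my own invention for illustration; it is not part of Clonezilla.

```python
import os

SECTOR_BYTES = 512  # /sys/block/<dev>/size is always counted in 512-byte units


def list_block_devices(sys_block: str = "/sys/block") -> list[tuple[str, float, bool]]:
    """Return (device name, size in decimal GB, removable flag) for each disk."""
    devices = []
    for name in sorted(os.listdir(sys_block)):
        # Loop and RAM devices are not real disks; leave them out of the list.
        if name.startswith(("loop", "ram")):
            continue
        base = os.path.join(sys_block, name)
        try:
            with open(os.path.join(base, "size")) as f:
                sectors = int(f.read().strip())
            with open(os.path.join(base, "removable")) as f:
                removable = f.read().strip() == "1"
        except (OSError, ValueError):
            continue  # skip entries that lack the expected attributes
        devices.append((name, sectors * SECTOR_BYTES / 10**9, removable))
    return devices


if __name__ == "__main__":
    for name, gb, removable in list_block_devices():
        print(f"/dev/{name:8} {gb:8.1f} GB  removable={removable}")
```

On our typical desktop this should show the 500 Gigabyte internal drive and any external drive side by side. Treat the removable flag as a hint only: it describes removable media, so a USB hard drive may well report 0. The size is usually the more reliable clue.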

Application Data Backups

Applications are the programs we use with PCLinuxOS. By and large, these come from the Software Repositories through the Synaptic package manager, or from the installation media. If we've just made a baseline configuration backup, it includes the applications too, right? So why talk about application data backups now? It's simple, really: PCLinuxOS is a rolling release, and, especially since KDE 4 shipped, the Packaging Team has been releasing updates aggressively. With each set of updates, the applications on our system change, so we need a backup plan that covers this change.

Because of how applications are handled in PCLinuxOS, it is wise to place system and application data on separate partitions or, failing that, to place system and application data together on a single partition separate from user data (PCLinuxOS does this by default). In our typical system, 12 Gigabytes have been set aside for system and applications, four Gigabytes are reserved for a swap partition, and the rest goes to /home, which stores all the user data. With system and applications sharing one partition, it makes sense to back them up at the same time. It would be convenient enough to use Clonezilla Live to back up just the system and applications partition to an image at regular intervals, but let's not be the guy who has only a hammer in his toolkit. Other tools are available, and some offer very sophisticated capabilities (e.g. scheduled, unattended backups, and incremental and differential backups) that are worth looking at.
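To see what "the rest" comes to on a 500 Gigabyte drive, here is the arithmetic as a small Python sketch. It assumes, as is usual, that the drive maker's 500 GB means 500 × 10^9 bytes, while the 12 and 4 Gigabyte partitions are sized in binary units (GiB, 2^30 bytes), the way many partitioning tools display them. The helper name is hypothetical.

```python
# Rough partition budget for the "typical desktop".
# Assumption: the drive's advertised 500 GB is decimal (10**9 bytes), while the
# system and swap partitions are sized in binary GiB (2**30 bytes).

GB = 10**9    # decimal gigabyte, as printed on the drive's label
GiB = 2**30   # binary gibibyte, as many partitioning tools report sizes


def home_partition_gib(disk_gb: float, system_gib: float, swap_gib: float) -> float:
    """Return the space left over for /home, in GiB."""
    disk_gib = disk_gb * GB / GiB
    return disk_gib - system_gib - swap_gib


if __name__ == "__main__":
    home = home_partition_gib(500, system_gib=12, swap_gib=4)
    print(f"/home gets about {home:.1f} GiB")
```

This prints about 449.7 GiB for /home, which squares with the "over 440 Gigabytes" of user space in our typical desktop.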

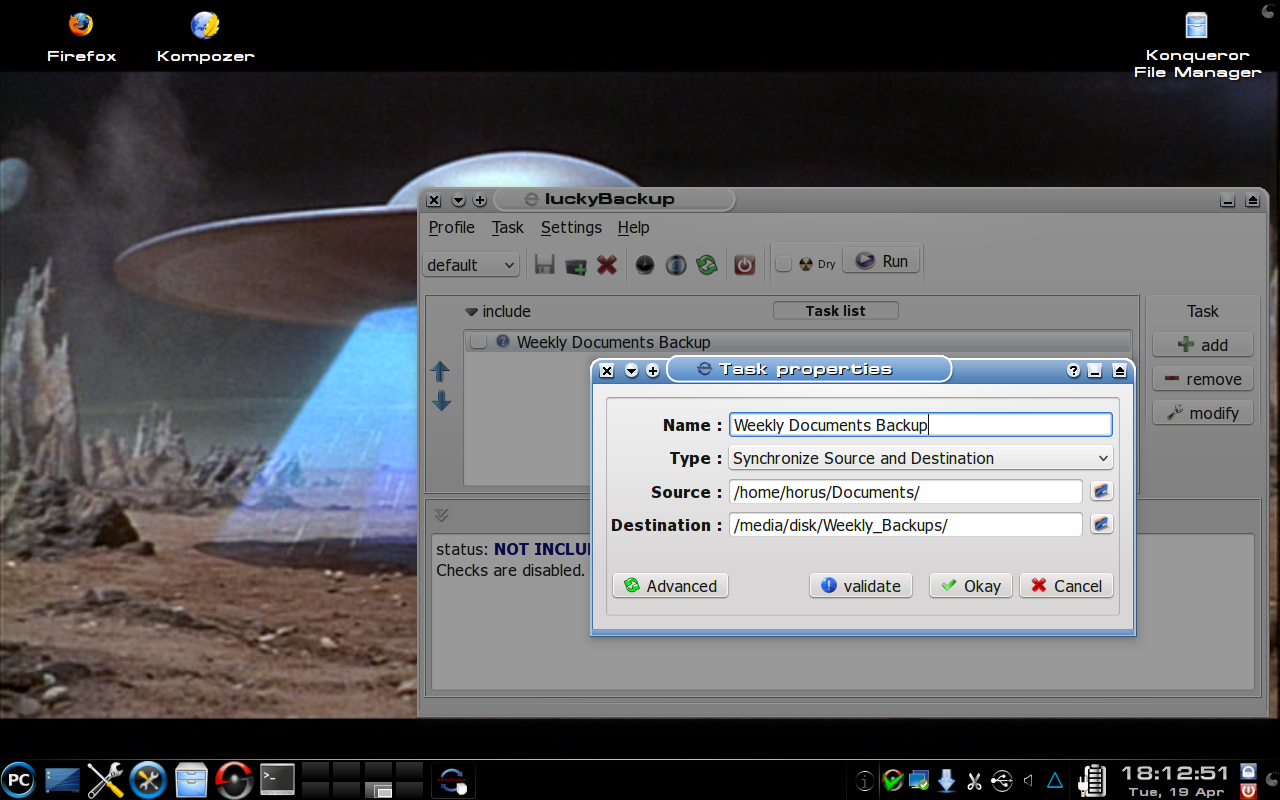

Before writing this, I pulled down and installed several other tools from the repositories, and I have to say I'm sticking with what I know: luckyBackup. This little gem is a slick GUI for controlling rsync, a command-line tool that copies and synchronizes files efficiently by transferring only what has changed.
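Much of rsync's speed on repeat runs comes from skipping files that haven't changed; by default it decides this with a "quick check" of each file's size and modification time. The Python function below is a toy illustration of that one idea, not a substitute for rsync: it leaves out rsync's delta transfer of changed files, deletions, permissions, ownership and links. The name mirror_changed is hypothetical.

```python
import os
import shutil


def mirror_changed(src: str, dst: str) -> list[str]:
    """Copy files from src into dst only when missing or different in size/mtime.

    A toy version of rsync's default quick check; returns the relative paths copied.
    """
    copied = []
    for root, _dirs, files in os.walk(src):
        rel_dir = os.path.relpath(root, src)
        target_dir = os.path.normpath(os.path.join(dst, rel_dir))
        os.makedirs(target_dir, exist_ok=True)
        for name in files:
            source = os.path.join(root, name)
            target = os.path.join(target_dir, name)
            st = os.stat(source)
            if os.path.exists(target):
                tt = os.stat(target)
                # Same size and same timestamp: assume the file is unchanged.
                if tt.st_size == st.st_size and int(tt.st_mtime) == int(st.st_mtime):
                    continue
            shutil.copy2(source, target)  # copy2 also preserves the modification time
            copied.append(os.path.relpath(source, src))
    return copied
```

A second run over an untouched source copies nothing, which is why the weekly runs after the first full backup tend to finish quickly.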

The manual for luckyBackup is decent enough, though it's easy to tell it was written by a programmer rather than a technical writer. That is not a fatal shortcoming, and the author covers the program's proper use and feature set well enough to get a handle on using it safely. Run luckyBackup as the super-user (root) when backing up or restoring system and application data, so that it can read every system file and preserve ownership and permissions. (Again, luckyBackup could be the subject of a later article, but it is simple enough that some light reading in the manual should get anyone where they need to be relatively quickly.)
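Under the hood, a luckyBackup task for system and applications data comes down to an rsync command. The Python sketch below assembles one plausible such command; it is my own assumption, not the exact command luckyBackup generates. The flags -a (archive), -A (ACLs), -X (extended attributes) and -H (hard links) preserve file metadata, --delete makes the destination an exact mirror, and the exclude list skips virtual filesystems plus /home, which our layout backs up separately. The destination /mnt/backup/system/ is a hypothetical mount point; because /mnt/* is excluded, the backup won't try to copy itself.

```python
import os
import shlex

# Virtual or transient filesystems that should never be copied, plus /home,
# which sits on its own partition in our layout and gets its own backup task.
EXCLUDES = ["/dev/*", "/proc/*", "/sys/*", "/run/*", "/tmp/*",
            "/mnt/*", "/media/*", "/lost+found", "/home/*"]


def system_backup_command(dest: str) -> list[str]:
    """Build (but do not run) an rsync command that mirrors / into dest."""
    cmd = ["rsync", "-aAXH", "--delete"]
    cmd += [f"--exclude={pattern}" for pattern in EXCLUDES]
    cmd += ["/", dest]
    return cmd


if __name__ == "__main__":
    if os.geteuid() != 0:
        print("Warning: not running as root; some system files will be unreadable.")
    print(shlex.join(system_backup_command("/mnt/backup/system/")))
```

It only prints the command; after checking it, the same list could be handed to subprocess.run(cmd, check=True). Note that --delete removes files from the backup that no longer exist on the system, one more reason to keep that drive for backups only.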

Regardless of which backup method you use, dedicate the backup medium solely to backups. Resist the temptation to use the removable drive that holds your music or other files as a backup drive! There's no worse feeling than realizing you just deleted your backups by mistake. Make the backups, then shut down, remove the drive from the system, and put it away in a safe location when not in use. If the data on the drive is sufficiently important, consider lockable storage to keep it safe. How often should system and application data be backed up? A good gauge: every time it changes, or is about to change significantly.
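One way to enforce the "dedicated drive" rule in a script is to check, before every backup, that the target is actually mounted and carries an identifying marker file you created once on the backup drive. The marker name .backup-drive-id and the ID strings below are hypothetical; use whatever you like. The mount check matters as much as the marker: if the drive isn't plugged in, its mount point is just an empty directory on the system disk, and a backup written there would quietly fill the root partition.

```python
import os

MARKER_NAME = ".backup-drive-id"  # hypothetical file created once on the backup drive


def is_dedicated_backup_drive(mount_point: str, expected_id: str,
                              require_mount: bool = True) -> bool:
    """Return True only if mount_point looks like *the* dedicated backup drive."""
    # An unmounted mount point is an ordinary directory on the system disk.
    if require_mount and not os.path.ismount(mount_point):
        return False
    try:
        with open(os.path.join(mount_point, MARKER_NAME)) as f:
            return f.read().strip() == expected_id
    except OSError:
        return False  # no marker: probably the music drive, not the backup drive
```

A backup script would simply refuse to run whenever is_dedicated_backup_drive("/mnt/backup", "weekly-1") returns False.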

Why that last bit about "is about to change"? Because right before a major upgrade, it's a good idea to preserve the present system state in case something unexpected goes wrong. Is it necessary to schedule unattended backups? That really depends on how critical the system is, how valuable its output is, and how inconvenient scheduled backups might be. Regardless of inconvenience or other factors, though, it's a good idea to establish regular backup habits for all data covered by your plan. If, for example, we scheduled a backup for 03:00 every Friday morning, we'd have the comfort of knowing that our backup sets would be no more than a week old in the event of a crash.
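In cron terms, that Friday 03:00 schedule is the entry `0 3 * * 5` (minute, hour, day of month, month, day of week, with 5 meaning Friday). The Python sketch below works out the next such slot from any given moment, which also shows why the newest backup is at most a week old as long as each run succeeds. The function next_backup is a hypothetical helper for illustration, not part of luckyBackup.

```python
from datetime import datetime, timedelta

FRIDAY = 4  # datetime.weekday() counts Monday as 0, so Friday is 4


def next_backup(now: datetime, weekday: int = FRIDAY, hour: int = 3) -> datetime:
    """Return the next scheduled backup time strictly after `now`."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:
        candidate += timedelta(days=7)  # this week's slot has already passed
    return candidate


if __name__ == "__main__":
    print(next_backup(datetime.now()))
```

Because consecutive slots are exactly seven days apart, a crash at any moment finds the last completed backup less than a week behind.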

User Data: The Challenges of Growth

Data users place on a system can be thought of as coming from two sources: downloaded or copied files such as music, video and other media, and original output (personal photos, video, music, spreadsheets, documents, email, bookmarks, etc.) that users produce with applications. PCLinuxOS, like most Linux distributions, also keeps user-specific configuration data in each user's home directory. In the beginning there may not be much user data, but as the system stays in use, user data sets will almost certainly grow. The simplest approach to backing up user data is to copy the entire /home partition to an external hard drive large enough to hold it, but as the data set grows, so does the capacity the backup device needs. Compressed images such as those produced by Clonezilla Live can help offset this (luckyBackup, being built on rsync, makes plain file copies rather than compressed archives), but we still need to evaluate how to back up this growing data set most efficiently.

We need to look at how often data in the user space changes, and consign relatively static data to archival media for long-term storage. In our typical desktop there's over 440 Gigabytes of capacity for user data. Wow. That's a lot of data. How much of it changes often? If our user is a music and movie lover, maybe not much. The best path here is to copy multimedia and other relatively static file sets to removable optical media (DVD±R/RW or CD-R/RW) using a tool such as k3b, and to store those discs safely until we need them. Exclude such static data from the regular weekly luckyBackup backups, since it is already stored on a durable, reliable medium.
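A rough way to find candidates for the optical archive is to sort files by when they were last modified. The Python sketch below splits a directory tree into "static" files (untouched for at least a year, an arbitrary threshold chosen for illustration) and "active" ones, and gives a lower bound on how many single-layer DVDs (4.7 GB each) the static set would fill. The function names are hypothetical; the actual burning would still be done with k3b.

```python
import math
import os
import time
from typing import Optional

DVD_BYTES = 4_700_000_000  # single-layer DVD±R capacity, as marketed


def split_by_age(root: str, static_days: int = 365,
                 now: Optional[float] = None) -> tuple[list[str], list[str]]:
    """Split files under root into (static, active) lists by modification time."""
    now = time.time() if now is None else now
    cutoff = now - static_days * 86400  # seconds in static_days days
    static, active = [], []
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            try:
                mtime = os.stat(path).st_mtime
            except OSError:
                continue  # vanished or unreadable; skip it
            (static if mtime < cutoff else active).append(path)
    return static, active


def min_discs(paths: list[str]) -> int:
    """Lower bound on DVDs needed; real packing may need more."""
    total = sum(os.path.getsize(p) for p in paths)
    return math.ceil(total / DVD_BYTES)
```

The static list is also what you'd add to the exclusions of the weekly luckyBackup task. The disc count is only a lower bound, since whole files can't always be packed neatly onto discs.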

We won't get into archive management here, other than to say that optical media have a limited shelf life: discs should be checked for readability at least every two or three years, and media over seven years old should be copied to new discs for best results. (Obviously, it helps if discs are labeled with the dates on which they were burned.) User data that changes often should be backed up on schedule, and luckyBackup can easily automate this to run after the system and applications backup or on another day of the week. Data that changes especially rapidly (work in progress) might be better off copied to a USB flash drive or a USB hard drive separate from the other backup sets, so that it is readily available.
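Checking a disc for readability means reading every byte back, and comparing against checksums recorded at burn time turns that into a pass/fail test. The Python sketch below builds a SHA-256 manifest of a directory before burning and later verifies a mounted disc against it. The function names are hypothetical, and how you store the manifest (for example, a JSON file burned alongside the data) is up to you.

```python
import hashlib
import os


def sha256_of(path: str, chunk: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large video files don't fill memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk), b""):
            h.update(block)
    return h.hexdigest()


def make_manifest(root: str) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    manifest = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(dirpath, name)
            manifest[os.path.relpath(full, root)] = sha256_of(full)
    return manifest


def verify(root: str, manifest: dict[str, str]) -> list[str]:
    """Return the files that are missing, unreadable, or no longer match."""
    bad = []
    for rel, digest in sorted(manifest.items()):
        try:
            if sha256_of(os.path.join(root, rel)) != digest:
                bad.append(rel)
        except OSError:
            bad.append(rel)  # a read error is exactly what an ageing disc produces
    return bad
```

An empty list means every file read back intact; any name it returns is a sign that it's time to copy that disc to fresh media.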

Summary

What we have done so far is discuss the bare bones of a three-pronged approach to backup on a typical system: system and application data backed up weekly by an automated luckyBackup task, user data handled by a separate luckyBackup task, and everything beginning with either a Clonezilla image or a live CD/DVD of the baseline configuration taken at installation. What we have not talked much about is web-based backup services.

This is an area in which I have little personal knowledge, so let me invite anyone who knows it well to add to our discussion. Next time, I hope we can talk about the specifics of using the tools offered for consideration here.