Alternate OS: OpenIndiana, Part 2

by Darrel Johnston (djohnston)

The Solaris operating system produced two extremely powerful and innovative creations. The first is DTrace, a dynamic instrumentation framework. Its main components are the DTrace framework itself and the instrumentation providers, both of which reside in the kernel. The two are kept separate because different providers can use different instrumentation techniques. Instrumentation providers are loadable kernel modules that define a set of probes they can activate on demand. These probes are advertised to system users and are identified by four elements: <provider name, module, function, probe name>. In a script file, users can specify all four elements to activate a specific probe, or any subset of the four to activate every probe that matches that subset.
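To make the four-element matching concrete, here is a minimal Python sketch. It is only a model of the idea, not DTrace itself: the probe list is hypothetical, and the names `Probe`, `PROBES` and `match_probes` are invented for illustration. It assumes the colon-separated `provider:module:function:name` form used on the dtrace command line, where an empty field matches anything.

```python
from fnmatch import fnmatchcase
from typing import NamedTuple


class Probe(NamedTuple):
    provider: str
    module: str
    function: str
    name: str


# Hypothetical probe list for illustration; a real system advertises its own.
PROBES = [
    Probe("syscall", "", "open", "entry"),
    Probe("syscall", "", "open", "return"),
    Probe("syscall", "", "read", "entry"),
    Probe("fbt", "zfs", "zfs_read", "entry"),
]


def match_probes(description, probes=PROBES):
    """Return the probes matching a 'provider:module:function:name' string.

    An empty field is treated as a wildcard, so naming only a subset of
    the four elements selects every probe that agrees on that subset.
    """
    fields = description.split(":")
    if len(fields) != 4:
        raise ValueError("expected provider:module:function:name")
    patterns = [field or "*" for field in fields]
    return [
        probe
        for probe in probes
        if all(fnmatchcase(value, pat) for value, pat in zip(probe, patterns))
    ]


if __name__ == "__main__":
    for probe in match_probes("syscall::open:"):
        print(probe)
```

Naming only the provider and function, as in the usage line, selects both the entry and return probes of that function.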

The D language, used for DTrace scripting, is derived from a large subset of C, and allows access to the kernel's native types and global variables. Inside the D script file, users can specify the providers' names, the probes' names, and the actions to take whenever each probe is hit. This file is then compiled by the D compiler implemented in the DTrace library and invoked by the dtrace command. Upon execution, the DTrace framework enables the probes that were specified in the file by making the appropriate calls to the probes' providers.

Many features of the framework make systems administrators' and programmers' debugging tasks much easier. Examples of the D language and DTrace's capabilities are well beyond the scope of this article. The DTraceToolkit, a collection of over 200 useful and documented DTrace scripts developed by Brendan Gregg, can be downloaded from the OpenSolaris.org website, which now has Oracle branding.

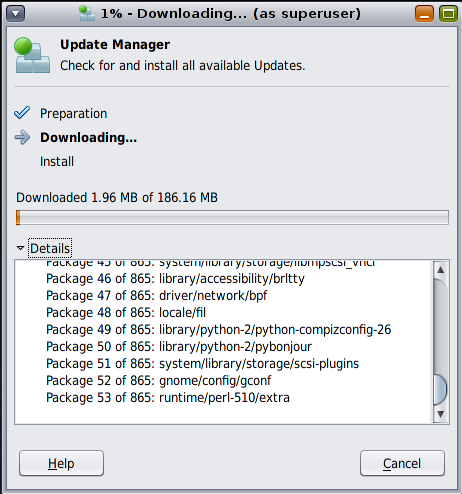

Like any good system administrator, I checked for updates at the beginning of my last OpenIndiana session. There were a few. Eight hundred sixty-five, to be precise.

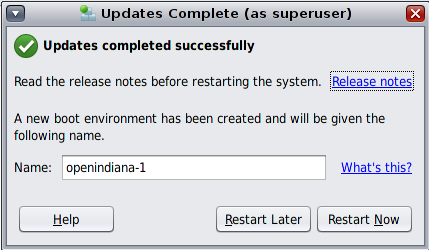

I wasn't sure why there were so many, until the downloaded packages had been installed and this dialog window popped up.

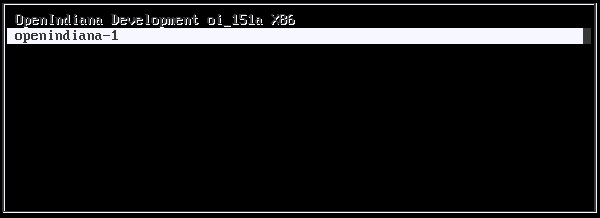

A new boot environment had been created and I was asked whether to reboot now or later. I chose now. After reboot, there was a new GRUB entry, selected by default.

After logging into the Gnome session, I saw no apparent changes. Let's see what they were, apart from all those packages. Before the update, uname -a showed SunOS openindiana 5.11 oi_151a i86pc i386 i86pc Solaris. After the update, it showed SunOS openindiana 5.11 oi_151a2 i86pc i386 i86pc Solaris. That's a kernel upgrade from alpha version 1 to alpha version 2, although the new boot environment is named openindiana-1. It was done on the running OS, updated in place, with only a few mouse clicks and the root user's password. It sure beats upgrading a BSD kernel and userspace.

The second extremely powerful and innovative creation of the Solaris operating system is the ZFS file system. ZFS is a new kind of file system that provides simple administration, transactional semantics, end-to-end data integrity, and immense scalability. It is a fundamentally new approach to data management.

ZFS presents a pooled storage model that completely eliminates the concept of volumes and the associated problems of partitions, provisioning, wasted bandwidth and stranded storage. Thousands of file systems can draw from a common storage pool with each one consuming only as much space as it actually needs. The combined I/O bandwidth of all devices in the pool is available to all file systems at all times.

All operations are copy-on-write transactions, so the on-disk state is always valid. Every block is checksummed to prevent silent data corruption, and the data is self-healing in replicated (mirrored or RAID) configurations. If one copy is damaged, ZFS detects it and uses another copy to repair it.
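The detect-and-repair behavior can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how ZFS is implemented: the class name `MirroredBlock` is invented, the two "disks" are just in-memory buffers, and SHA-256 stands in for whichever checksum the pool is configured to use.

```python
import hashlib


class MirroredBlock:
    """Toy model: one block kept on two 'disks', checksum stored separately."""

    def __init__(self, data):
        self.copies = [bytearray(data), bytearray(data)]
        # The checksum lives apart from the data it protects, so a damaged
        # copy cannot vouch for itself.
        self.checksum = hashlib.sha256(data).digest()

    def read(self):
        """Return verified data, rewriting any copy that fails its checksum."""
        for copy in self.copies:
            if hashlib.sha256(copy).digest() == self.checksum:
                good = bytes(copy)
                for i, other in enumerate(self.copies):
                    if bytes(other) != good:
                        self.copies[i] = bytearray(good)  # self-heal
                return good
        raise IOError("all copies failed checksum verification")


if __name__ == "__main__":
    block = MirroredBlock(b"important data")
    block.copies[0][0] ^= 0xFF  # simulate silent corruption on one disk
    print(block.read())
    print(bytes(block.copies[0]))
```

After the read, the damaged first copy holds the original data again, which is the "self-healing" the paragraph above describes.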

ZFS introduces a new data replication model called RAID-Z. It is similar to RAID-5 but uses variable stripe width to eliminate the RAID-5 write hole (stripe corruption due to loss of power between data and parity updates). All RAID-Z writes are full-stripe writes. There's no read-modify-write tax, no write hole, and no need for NVRAM in hardware.
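A rough Python sketch may help picture a full-stripe write with variable width. It makes several simplifying assumptions: a tiny made-up sector size (`SECTOR`), a single XOR parity column, blocks that fit in one row, and invented function names `make_stripe` and `rebuild`. The point is only that parity is computed over the block being written, so no existing stripe has to be read and modified.

```python
from functools import reduce

SECTOR = 4  # hypothetical, unrealistically small sector size in bytes


def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_stripe(block, max_data_cols):
    """Split one logical block into data columns plus one parity column.

    The stripe is only as wide as this block needs, and the parity
    covers this block alone.
    """
    if not block:
        raise ValueError("block must not be empty")
    sectors = [
        block[i:i + SECTOR].ljust(SECTOR, b"\0")  # pad the last sector
        for i in range(0, len(block), SECTOR)
    ]
    if len(sectors) > max_data_cols:
        raise ValueError("block too large for one row in this sketch")
    parity = reduce(xor_bytes, sectors)
    return sectors, parity


def rebuild(sectors, parity, lost):
    """Recover the column at index `lost` from the survivors and parity."""
    survivors = [s for i, s in enumerate(sectors) if i != lost]
    return reduce(xor_bytes, survivors, parity)


if __name__ == "__main__":
    columns, parity = make_stripe(b"abcdefghij", max_data_cols=4)
    print(len(columns), rebuild(columns, parity, lost=1))
```

A ten-byte block occupies three data columns here, while a two-byte block would occupy one, which is the sense in which the stripe width varies.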

Disks can fail, so ZFS provides disk scrubbing. Similar to ECC memory scrubbing, all data is read to detect latent errors while they're still correctable. A scrub traverses the entire storage pool to read every data block, validates it against its 256-bit checksum, and repairs it if necessary. All this happens while the storage pool is live and in use.

ZFS has a pipelined I/O engine, similar in concept to CPU pipelines. The pipeline operates on I/O dependency graphs and provides scoreboarding, priority, deadline scheduling, out-of-order issue and I/O aggregation. I/O loads that bring other file systems to their knees are handled with ease by the ZFS I/O pipeline.

ZFS provides 2^64 constant-time snapshots and clones. A snapshot is a read-only point-in-time copy of a file system, while a clone is a writable copy of a snapshot. Clones provide an extremely space-efficient way to store many copies of mostly-shared data such as workspaces, software installations, and diskless clients.
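Why a snapshot can be constant-time follows from copy-on-write, and a toy Python model shows the idea. The classes `FileSystem` and `Snapshot` are invented for illustration and use a dictionary of file names where ZFS uses a tree of blocks. Because a write never modifies the current root in place, taking a snapshot only means keeping a reference to the old root.

```python
class FileSystem:
    """Toy copy-on-write file system: a root table that is never mutated."""

    def __init__(self, root=None):
        self._root = root if root is not None else {}

    def write(self, name, data):
        # Copy-on-write: build a new root instead of changing the old one.
        # (Copying the whole table is a simplification of this sketch.)
        new_root = dict(self._root)
        new_root[name] = data
        self._root = new_root

    def read(self, name):
        return self._root[name]

    def snapshot(self):
        # Constant time: just remember the current root.
        return Snapshot(self._root)


class Snapshot:
    """Read-only point-in-time view of a FileSystem."""

    def __init__(self, root):
        self._root = root

    def read(self, name):
        return self._root[name]

    def clone(self):
        # A writable file system that shares all data until it diverges.
        return FileSystem(self._root)


if __name__ == "__main__":
    fs = FileSystem()
    fs.write("notes.txt", b"version 1")
    snap = fs.snapshot()
    fs.write("notes.txt", b"version 2")
    print(snap.read("notes.txt"), fs.read("notes.txt"))
```

The snapshot keeps returning the old contents after the live file system changes, and a clone made from it can be written to without disturbing either.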

You can snapshot an entire ZFS file system, and you can also create incremental snapshots that capture only what changed since an earlier snapshot. Incremental snapshots are so efficient that they can be used for remote replication, such as transmitting an incremental update every 10 seconds.
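The reason an incremental update is cheap to transmit can be shown with a small Python sketch. It is a simplification: snapshots are modeled as plain dictionaries of file names, whereas ZFS works on blocks, and the function names `incremental` and `apply_incremental` are invented for this example.

```python
def incremental(old, new):
    """Return (changed, deleted): what is needed to turn `old` into `new`."""
    changed = {
        name: data
        for name, data in new.items()
        if name not in old or old[name] != data
    }
    deleted = sorted(name for name in old if name not in new)
    return changed, deleted


def apply_incremental(base, changed, deleted):
    """Rebuild the newer snapshot on the receiving side."""
    result = dict(base)
    result.update(changed)
    for name in deleted:
        result.pop(name, None)
    return result


if __name__ == "__main__":
    monday = {"a.txt": b"alpha", "b.txt": b"beta", "old.log": b"x"}
    tuesday = {"a.txt": b"alpha", "b.txt": b"beta v2", "c.txt": b"gamma"}
    changed, deleted = incremental(monday, tuesday)
    print(sorted(changed), deleted)
    print(apply_incremental(monday, changed, deleted) == tuesday)
```

Only the changed and new entries travel to the remote side, however large the unchanged remainder is.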

There are no arbitrary limits in ZFS. You can have as many files as you want, with full 64-bit file offsets, unlimited links, unlimited directory entries, and so on.

ZFS provides built-in compression. In addition to reducing space usage by 2-3x, compression also reduces the amount of I/O by 2-3x. For this reason, enabling compression actually makes some workloads go faster.
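The link between compression and reduced I/O is easy to check in Python. This sketch uses the standard zlib module as a stand-in for whatever algorithm a pool is set to use, and the helper name `compressed_io` is invented here. The ratio depends entirely on the data, so the repetitive sample below says nothing about real workloads.

```python
import zlib


def compressed_io(data, level=6):
    """Return (raw_bytes, stored_bytes, round_trip_ok) for one buffer.

    stored_bytes approximates what would have to be written to and later
    read from disk if the buffer were compressed first.
    """
    packed = zlib.compress(data, level)
    return len(data), len(packed), zlib.decompress(packed) == data


if __name__ == "__main__":
    sample = b"OpenIndiana ZFS compression test line\n" * 1000
    raw, stored, ok = compressed_io(sample)
    print(raw, stored, ok)
```

Fewer bytes stored means fewer bytes moved between memory and disk, which is why a workload limited by disk speed can end up faster with compression enabled.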

In addition to file systems, ZFS storage pools can provide volumes for applications that need raw-device semantics. ZFS volumes can be used as swap devices, for example. And if you enable compression on a swap volume, you now have compressed virtual memory.

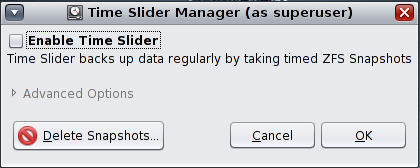

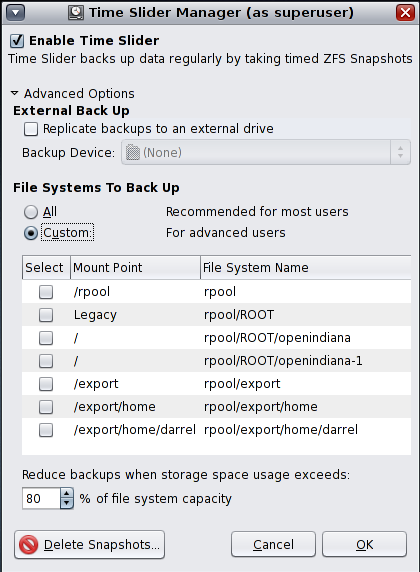

The constant-time snapshots are easily set up in OpenIndiana with the Time Slider Manager system utility. In the Gnome menu, go to System > Administration > Time Slider. After supplying root's password, you will see the Time Slider Manager window as shown below.

Check the Enable Time Slider box to start the service. But, wait! There's more. Click Advanced Options.

Backups can be stored on an external drive. You can selectively choose the mount points to back up. Note that the new /rpool/ROOT/openindiana-1 boot environment can be individually selected to exclude the older boot environment. The File Systems To Back Up choice is set to All by default. That's the one I chose. Once the OK button is clicked, a current system snapshot will be saved.

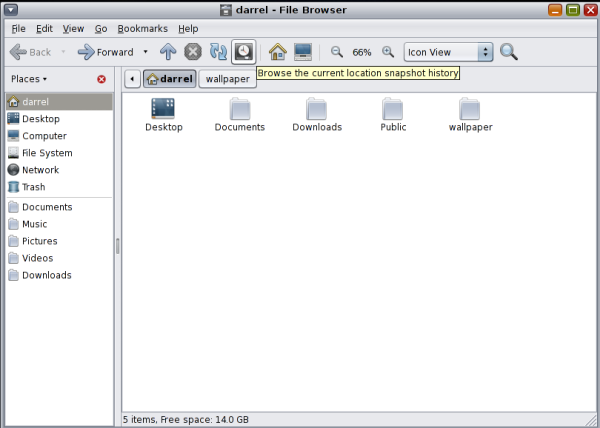

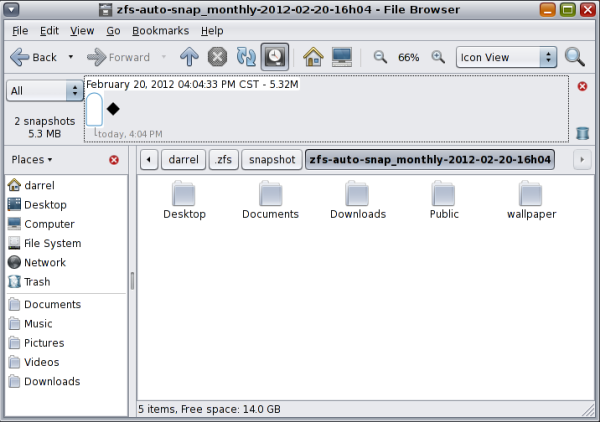

Once the time slider has been activated and is in use, the snapshot history icon in the Nautilus file manager is available for use.

Just as a quick test and demonstration, I moved the ~/wallpaper folder to ~/Documents/wallpaper.

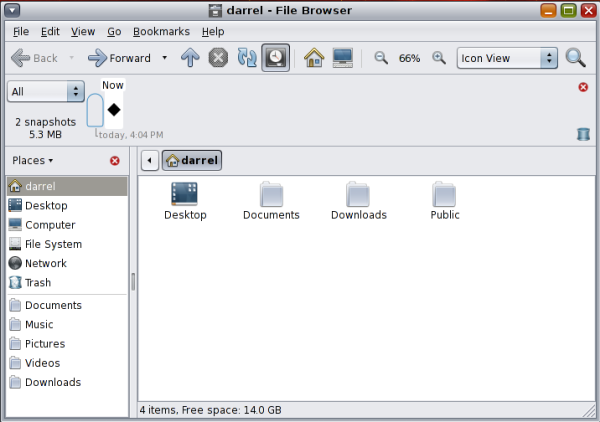

Then I selected the previous system snapshot in the file browser by clicking the vertical button to the left of the Now black diamond.

Voilà. The wallpaper folder is back in my home directory. We can attach a second SATA hard drive and add mirror capabilities by using a series of pfexec commands to replicate the first drive to the second one. Or, we can use the time slider GUI to use the second drive for ZFS snapshots. For more guidance on using ZFS, consult the Oracle Solaris ZFS Administration Guide.

Although the Mozilla applications are dated (Firefox is version 3.6.12 and Thunderbird is version 3.1.4), and the choice of office applications is slim, I find OpenIndiana a pleasure to use. With the familiar Gnome 2.30.2 desktop environment, the very Synaptic-like package manager GUI and the included system tools, it feels much like a Linux system. That is, until you consider the “back in time” capabilities of ZFS and Time Slider and the advanced troubleshooting capabilities of DTrace. This OS is running with only 1 GB of system RAM and a 20 GB hard drive. df / shows only 19% of openindiana-1's rpool in use. And, although the previous and current OpenIndiana versions are both listed as alpha releases, the OS is extremely stable, if not yet feature complete. I experienced no mouse, keyboard or window drawing lag whatsoever. None of the applications ever crashed. And with ZFS, file system checks are, hopefully, a thing of the past.