Altered Reality: AI Generated Images

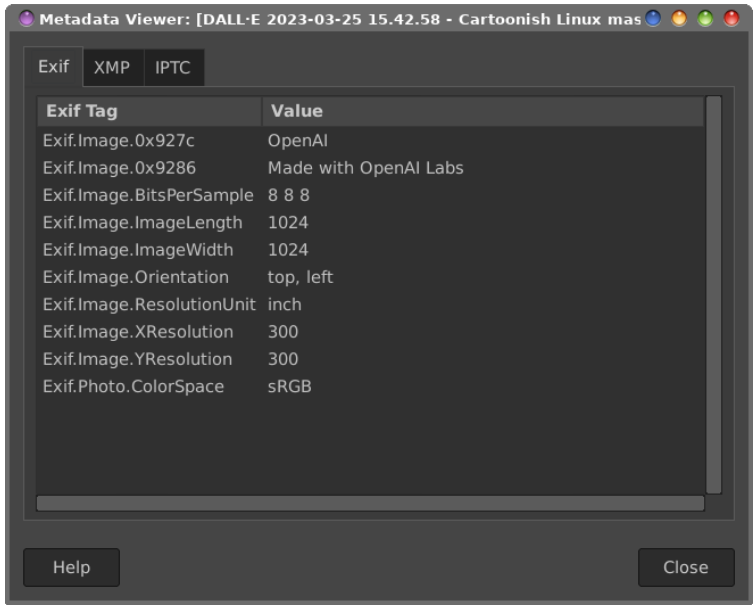

by Paul Arnote (parnote)

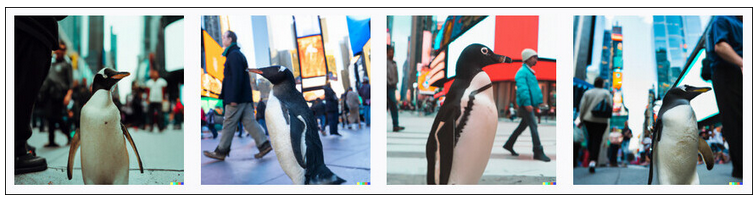

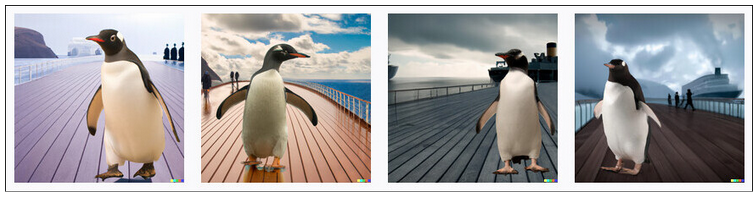

"A picture is worth a thousand words." "Pictures, or it didn't happen." "A photo doesn't lie." We've all most likely heard at least one of the above common sayings in describing images or pictures, if not all of them. And, until just very recently, there was a high level of "truth" in those sayings. But now, that line between what's real and what's not real has been blurred beyond distinction, blurring our perception of what is real and what is "Memorex." The entire computer world, as well as mainstream media outlets, has been abuzz within the past year with the emergence of GPT-4 AI content creation. You have probably already heard of ChatGPT or DALL-E. Reports about its abilities have permeated recent news cycles. The tech industry has certainly heard about it, as virtually everyone and their brother is jumping on the AI airship. Google has announced their upcoming use of AI to help power their search engine. Microsoft has announced what appears to be a full embrace of new AI capabilities. Amazon is preparing to launch their answer to the rush to AI power. Adobe has announced Firefly, their answer to the image creator DALL-E. Every week, it seems that one or more tech giants are embracing AI in one form or another. In fact, even the April 2023 cover image was generated by me (not that I count myself among "tech giants"), using simple text to describe the image I was wanting to create on the DALL-E AI image creator website (shown below).  Creating the image was as simple as describing what I wanted in the image, using plain text descriptors. For the above image, I entered "Cartoonish Linux mascot Tux dressed as the Easter Bunny, in a large green meadow with mounds of Easter candy and Easter eggs strewn all about." That generated four images for me to choose from, shown below. And boy, it created those images in like exceptionally little time. As in, just a matter of seconds.  
As you can see, only ONE of the four images is actually Linux mascot Tux dressed as the Easter Bunny (second from the left). The other three depict an actual bunny as the Easter Bunny.

For fun, coupled with a bit of "what if," I repeated my request with slightly altered image creation criteria. For this second round, my creation text read "Cartoonish Linux mascot Tux dressed as the Easter Bunny, in a large green meadow with mounds of Easter candy and Easter eggs strewn all about holding an Easter Basket." That generated another four images for me to choose from, shown below. Again, the images were generated and presented to me in a matter of seconds.

This time, all of the images were of a bunny posing as the Easter Bunny. It seems that the "Linux mascot Tux dressed as the Easter Bunny" part of my image creation text was completely ignored. The generated images were nice, but the lack of Linux mascot Tux rendered them unusable for my intended purpose.

By this point, I was "stuck" on penguins in my image creation criteria. So, I repeated my creation request with different scenarios. In my first two image creation requests, I was deliberate about specifying a "cartoonish" image. So, how would DALL-E do at producing photorealistic images? I was about to find out, in pretty short order.

For my next "experiment" with DALL-E, I entered the following text as my image creation criteria: "Photo with penguin in the foreground, walking through Times Square." Below are the four images that DALL-E presented, based on that specific image creation criteria.

Has there ever been a penguin walking in Times Square? I don't know. Maybe. But I wanted to "test" how realistic an image DALL-E would present. To be perfectly honest, I was blown away by how genuine and realistic the images appeared. Again, the images were created and presented to me in a matter of seconds. But my curiosity wasn't satiated yet.
I decided to do another test, with "Photo with penguin in the foreground, walking on the Titanic" as my image creation criteria. Below are the results.

Once again, these were nice images generated by DALL-E. But I realized that the Titanic is depicted in the background of most of the images, so the penguin couldn't possibly be walking ON the Titanic. So, I repeated my image creation text, with a slight modification. I used "Photo with penguin in the foreground, walking on the deck of the Titanic" instead.

Now we're talking! The image results are very good. They look realistic and genuine, even with appropriately positioned shadows under the penguin. And for the most part, it gave me exactly what I asked for.

By this time, I was informed by the OpenAI website that I had just 10 "credits" remaining, so I stopped creating images. I wanted to "save" my credits for that "just in case" moment that I'd need to "create" other images. Apparently, users are "given" 15 credits per month, and each four-image set costs one credit. At least, that's how I understand the "credits" to work. Users can also purchase additional credits. You can get 115 credits for $15 (U.S.). Monthly credits (for users who signed up prior to April 6, 2023) expire one month after they are granted, and do not carry over from month to month. Paid credits expire one year after they are purchased. You can read all about how DALL-E credits are used here.

And yes, you do have to sign up for an account. I have yet to receive an email from OpenAI (who runs the DALL-E website) beyond the initial account setup.

More Than Just DALL-E

While DALL-E is the first to go public with its AI image creation, it's not necessarily the only game in town … or at least, soon won't be.

Microsoft's Bing search engine also allows users to create AI images, but it currently uses OpenAI's DALL-E to create them. So, everything you've learned about DALL-E applies to images created via Bing.
You have to "sign up" on the Bing image creation site, which (according to a disclaimer on the site) also signs you up for emails from Microsoft Rewards. Uhm … no thank you! Unless I tune up my spam filter first. At least they tell you up front that signing up for the image creator also signs you up for a flood of Microsoft spam.

Adobe's Firefly is currently in beta. Firefly is touted as being easier to use, although it's difficult to see how much easier AI image creation can be than it is with DALL-E. Interested users can request access here, by filling out the form at the link. Access to the beta will be expanded over time, so signing up will most likely mean that you'll have to "wait in line" for access. I've not been able to uncover any costs associated with the final product. But, knowing Adobe's track record, access won't come cheap.

Google, believe it or not, is playing catch-up, after falling asleep at the proverbial AI wheel. They have announced that their AI solution is "coming soon," but no date has been revealed at the time of this writing.

Amazon has also announced that they have an AI competitor "coming soon." But, as with Google, no date has been revealed at the time of this writing.

You can rest assured that you're likely to see other AI products come along as AI gains steam.

The Danger Zone: Altered Reality

The images created by DALL-E are good. Damn good. In fact, maybe they're TOO good. And therein lies the real problem.

GIMP does display EXIF metadata for the image downloaded from DALL-E. To be honest, I've always associated EXIF data with JPG files, so I was quite surprised to find it embedded in the PNG file I downloaded from DALL-E. But how many people are going to even think of looking at or for the metadata included in an image? Plus, every action I performed on the downloaded image, whether resizing it or converting it between formats, resulted in the EXIF data being deleted or overwritten.
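For readers who would rather check for embedded metadata from a script than open GIMP, here is a minimal Python sketch. It relies only on the PNG file layout (an 8-byte signature followed by chunks, each with a length, a four-letter type, data, and a CRC) and on the fact that the PNG specification defines an `eXIf` chunk type for EXIF data. I am assuming, not asserting, that this is where the metadata GIMP displayed lives; some tools store metadata in text chunks instead. The helper names (`png_chunk_types`, `has_exif`, `make_demo_png`) and the tiny demo image are made up for illustration and are not part of any DALL-E or GIMP tooling.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_chunk_types(data: bytes) -> list:
    """Return the chunk type names found in PNG bytes, in file order."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    types = []
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk starts with a 4-byte big-endian length and a 4-byte type.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        types.append(ctype.decode("ascii"))
        pos += 8 + length + 4  # skip header, chunk data, and 4-byte CRC
    return types


def has_exif(data: bytes) -> bool:
    """True if the PNG bytes contain an eXIf chunk."""
    return "eXIf" in png_chunk_types(data)


def _chunk(ctype: bytes, payload: bytes) -> bytes:
    """Build one PNG chunk: length, type, payload, CRC over type + payload."""
    crc = zlib.crc32(ctype + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


def make_demo_png(with_exif: bool) -> bytes:
    """Build a 1x1 grayscale PNG in memory (illustration only)."""
    ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 0, 0, 0, 0)
    idat = zlib.compress(b"\x00\x00")  # one filter byte plus one pixel
    parts = [PNG_SIGNATURE, _chunk(b"IHDR", ihdr)]
    if with_exif:
        # Placeholder EXIF payload: a TIFF header with an empty directory.
        placeholder = b"MM\x00\x2a\x00\x00\x00\x08" + b"\x00\x00" + b"\x00\x00\x00\x00"
        parts.append(_chunk(b"eXIf", placeholder))
    parts += [_chunk(b"IDAT", idat), _chunk(b"IEND", b"")]
    return b"".join(parts)


if __name__ == "__main__":
    print(png_chunk_types(make_demo_png(with_exif=True)))
    print(has_exif(make_demo_png(with_exif=False)))
```

To try it on a real download, read the file in binary mode and pass the bytes to `has_exif()`, for example `has_exif(open("downloaded.png", "rb").read())`, where the file name is whatever you saved the image as. A result of `False` only means no `eXIf` chunk is present; it says nothing about whether the image is genuine.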
In my experience, the EXIF data is ONLY available in the image that was originally downloaded from DALL-E.

So, with the reality being that most people will never look at (or think of looking at) the metadata embedded in an image, the risk of abuse is off the charts. With the photorealism of the images created by AI, it's difficult to ascertain whether an image is real or an AI creation. Perform a couple of actions on the image (other than renaming it), and all you have is someone's word about whether the image is genuine or not.

There is a Google Chrome plugin, Fake Profile Detector, that can be used to spot "fake AI generated images." It comes from V7 Labs, and is typically used to help spot fake profiles and profile images. It's not known whether it is capable of spotting AI generated images that are NOT profile images of people. It's a start, but I do wonder what methodology it uses to detect fake images. If it's just the EXIF data we found earlier, that's easy enough to bypass, as we've shown.

You can bet your life savings that users with malicious intent have already taken this information and added it to their bag of tricks. It's only a matter of time before bad actors leverage AI created images for their own private gain, to advance their agenda, or to inflict harm on those who think differently.

Today's political environment has separated us more than ever before. Disinformation is pervasive on both sides of the political divide. Without some safeguards (provided via legislative measures and by the AI providers themselves), the proliferation of AI generated content will make it even more difficult to separate the disinformation from the genuine information.

As with most other areas, I doubt that the AI content providers will do an adequate job of self-regulation and self-policing. In just about every instance where self-regulation and self-policing have been tried, they have failed miserably. We should learn from our past mistakes, but never seem to.
And to be perfectly honest, I doubt the success of legislative measures, because in most cases the legislators who write the laws don't understand the technology that they are attempting to regulate. Do you have doubts? Just look at the current harvesting of your private and personal information from the web by many government and corporate entities, which continues unchecked, mostly due to legislative inaction. That legislative inaction is fueled by two main factors: ignorance of the technology they are trying to regulate, and graft and payoffs to legislators from corporate lobbyists. What a Catch-22!

Then there's the whole concept of AI generated content. When you stop and think about it, AI generated content is scary and unsettling. It's the new great unknown. I've heard it said, or something to this effect, that whoever controls the content controls the world and controls the minds of the people.

Will life imitate art (in particular, the movies)? Like at the end of the Jodie Foster movie of Carl Sagan's Contact, will we be the long lost creators of technology that continues to function long after we are dead and gone, and the civilizations that remain continue to use the technology because it just works? Or will the AI become self-aware, and morph into something resembling Skynet from the Terminator movie series, where there are actual battles between humans and machines? Like I said, it's scary stuff.

Remember those three common sayings I began this article with? The AI image creators throw all of those out the proverbial window, baby, bathwater and all.